Hi, I'm wondering if anyone can help with this? I have a more specific example.

I would like to use the XML to calculate the projection from a pixel (u, v) in a 2D image to a location (X, Y, Z) in the point cloud. In the files I have, a specific example is getting from pixel (2521.209961, 2522.959961) in image DJI_0217 to point (105.63217, -10.47468, 243.83644). The XML fragments corresponding to this camera are at the bottom of this post.

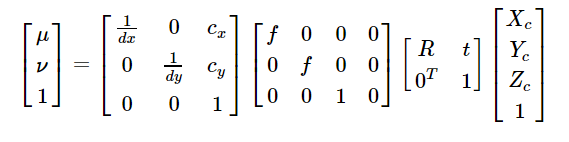

I have found a post which I think outlines what I need to do:

https://programmer.group/xml-interpretation-of-agisoft-photoscan-mateshape-camera-parameters.html . The equation they give is

I'm unsure which of the parameters below should be used for dx and dy, and which for R, the rotation matrix. Should R come from the transform of the specific camera, or from the chunk transform matrix? I've tried a number of different combinations using the example points, but can't get them to match. I've also tried the reverse direction, going from 3D to 2D, following this post

https://www.agisoft.com/forum/index.php?topic=6437.0, but again I'm unclear on where I'm going wrong with picking values from the XML. Any help would be very appreciated! Thanks for your time, and sorry for the long post.
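For the 3D to 2D direction, here is a minimal sketch of how the calibration values listed further down would enter the projection. It assumes the frame-camera model as described in the Metashape user manual (camera Z axis pointing forward, `cx` and `cy` as offsets from the image centre in pixels, `f` in pixels), and it assumes that parameters missing from the XML (`k4`, `b1`, `b2`, `p3`, `p4`) are zero. Older PhotoScan versions may order the tangential terms differently, so treat the `P1`/`P2` lines as something to check against your version. The function name `project` is made up for this example.

```python
import numpy as np

# Calibration values copied from the <calibration> element in this post.
W, H = 4000, 3000                      # image width and height in pixels
F = 3118.23872343487                   # focal length in pixels
CX, CY = 21.6929492116479, -15.0408107258771   # principal point offset from image centre
K1, K2, K3 = 0.0303345331370718, -0.0667270494782827, 0.0653506193462477
P1, P2 = -3.94887881926124e-05, -0.000593847873789833

def project(p_cam):
    """Project a point given in camera coordinates to pixel (u, v).

    Assumes the Metashape frame model with Z pointing out of the lens;
    the point must have Z > 0 (in front of the camera).
    """
    X, Y, Z = p_cam
    x, y = X / Z, Y / Z                # normalised image coordinates
    r2 = x * x + y * y
    radial = 1.0 + K1 * r2 + K2 * r2**2 + K3 * r2**3
    # Tangential terms: ordering assumed from the current Metashape manual.
    xd = x * radial + P1 * (r2 + 2.0 * x * x) + 2.0 * P2 * x * y
    yd = y * radial + P2 * (r2 + 2.0 * y * y) + 2.0 * P1 * x * y
    u = W * 0.5 + CX + xd * F
    v = H * 0.5 + CY + yd * F
    return u, v

# A point on the optical axis lands on the principal point (W/2 + CX, H/2 + CY).
print(project(np.array([0.0, 0.0, 1.0])))
```

Under these assumptions there is no separate pixel-size term: the principal point is simply the image centre shifted by `cx`, `cy`.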

-------------------------------------------------------------------------------

For the calibration I have...

<calibration class="adjusted" type="frame">

<resolution height="3000" width="4000" />

<f>3118.23872343487</f>

<cx>21.6929492116479</cx>

<cy>-15.0408107258771</cy>

<k1>0.0303345331370718</k1>

<k2>-0.0667270494782827</k2>

<k3>0.0653506193462477</k3>

<p1>-3.94887881926124e-005</p1>

<p2>-0.000593847873789833</p2>

</calibration>

For the specific camera mentioned in the example I have...

</camera>

<camera id="215" label="DJI_0217" sensor_id="0">

<transform>-9.9845367866015977e-001 -4.7360369045444578e-002 2.9107507860923802e-002 2.0635994236918069e+000 -5.0010632995164908e-002 9.9389702762500165e-001 -9.8324132671528716e-002 -6.4615532779234619e-001 -2.4273198335146698e-002 -9.9627776859993980e-002 -9.9472866547642924e-001 -2.8160118158196525e-003 0 0 0 1</transform>

<rotation_covariance>8.4460505284871782e-006 -1.4902639446243033e-006 -1.1296027835376122e-008 -1.4902639446243037e-006 2.1520441968010174e-005 -6.9017167960388795e-007 -1.1296027835376149e-008 -6.9017167960388763e-007 5.7679957063865655e-006</rotation_covariance>

<location_covariance>1.2021170339207746e-003 -1.8157680112466801e-006 -1.3976334597317290e-005 -1.8157680112466801e-006 1.2033529966674376e-003 4.4792361160838019e-005 -1.3976334597317290e-005 4.4792361160838019e-005 2.0488157555429709e-003</location_covariance>

<orientation>1</orientation>

<reference enabled="true" x="105.632175972222" y="-10.4748751388889" z="313.197" />

</camera>

The chunk transform is

<transform>

<rotation locked="true">-8.1053840961487511e-001 -5.3146724142603119e-001 -2.4610984911212641e-001 -3.3158710760897486e-001 7.0029820158928557e-002 9.4082188237520392e-001 -4.8278098202653230e-001 8.4417912529244210e-001 -2.3298954443061240e-001</rotation>

<translation locked="true">-1.6902721015857060e+006 6.0408286681674914e+006 -1.1519789192598241e+006</translation>

<scale locked="true">1.0044411226433386e+001</scale>

</transform>

I understand this can be made into a 4x4 transform matrix by multiplying the rotation by the scale and appending the translation as the fourth column, which gives

Matrix([[-8.141381100991113, -5.33827552626121, -2.4720285313576693, -1690272.101585706],

[-3.330597266208162, 0.7034083117894531, 9.45000187740369, 6040828.668167491],

[-4.8492507157758356, 8.479282283208121, -2.3402427957204432, -1151978.919259824],

[0.0, 0.0, 0.0, 1.0]])
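A rough sketch of how the two transforms might chain together, under several assumptions that are not confirmed in this post: the camera `<transform>` maps camera coordinates to chunk coordinates, the chunk matrix above maps chunk coordinates to geocentric (ECEF) coordinates, and the point (105.63217, -10.47468, 243.83644) is WGS84 longitude, latitude and ellipsoidal height. The translation values are at least consistent with ECEF coordinates for that longitude and latitude. The helper names `geodetic_to_ecef` and `world_to_camera` are made up for this example.

```python
import numpy as np

A_WGS84 = 6378137.0            # WGS84 semi-major axis in metres
E2_WGS84 = 6.69437999014e-3    # WGS84 first eccentricity squared

def geodetic_to_ecef(lon_deg, lat_deg, h):
    """Convert WGS84 longitude/latitude (degrees) and ellipsoidal height (m) to ECEF."""
    lon, lat = np.radians(lon_deg), np.radians(lat_deg)
    n = A_WGS84 / np.sqrt(1.0 - E2_WGS84 * np.sin(lat) ** 2)
    return np.array([
        (n + h) * np.cos(lat) * np.cos(lon),
        (n + h) * np.cos(lat) * np.sin(lon),
        (n * (1.0 - E2_WGS84) + h) * np.sin(lat),
    ])

def parse(text, shape):
    """Parse the whitespace-separated numbers of an XML element into an array."""
    return np.array([float(v) for v in text.split()]).reshape(shape)

# Chunk transform (assumed chunk -> ECEF): scale * rotation, plus translation.
rot = parse("-8.1053840961487511e-001 -5.3146724142603119e-001 -2.4610984911212641e-001 "
            "-3.3158710760897486e-001 7.0029820158928557e-002 9.4082188237520392e-001 "
            "-4.8278098202653230e-001 8.4417912529244210e-001 -2.3298954443061240e-001", (3, 3))
trans = parse("-1.6902721015857060e+006 6.0408286681674914e+006 -1.1519789192598241e+006", (3,))
scale = 1.0044411226433386e+001
chunk = np.eye(4)
chunk[:3, :3] = scale * rot
chunk[:3, 3] = trans

# Camera transform for DJI_0217 (assumed camera -> chunk).
cam = parse("-9.9845367866015977e-001 -4.7360369045444578e-002 2.9107507860923802e-002 2.0635994236918069e+000 "
            "-5.0010632995164908e-002 9.9389702762500165e-001 -9.8324132671528716e-002 -6.4615532779234619e-001 "
            "-2.4273198335146698e-002 -9.9627776859993980e-002 -9.9472866547642924e-001 -2.8160118158196525e-003 "
            "0 0 0 1", (4, 4))

def world_to_camera(lon_deg, lat_deg, h):
    """Geographic point -> ECEF -> chunk coordinates -> camera coordinates."""
    p_world = np.append(geodetic_to_ecef(lon_deg, lat_deg, h), 1.0)
    p_chunk = np.linalg.inv(chunk) @ p_world
    p_cam = np.linalg.inv(cam) @ p_chunk
    return p_cam[:3]

p_cam = world_to_camera(105.63217, -10.47468, 243.83644)
# Normalised coordinates; feeding these through the calibration gives (u, v).
print(p_cam, p_cam[0] / p_cam[2], p_cam[1] / p_cam[2])
```

If these assumptions hold, both transforms are needed: the camera transform alone only reaches the chunk's internal coordinates. Going the other way, a single pixel only defines a ray from the camera centre, so recovering (X, Y, Z) from (u, v) also needs a depth or an intersection with the model surface.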